Aitrainee presents a highly effective TTS (Text-to-Speech) model, a fully non-autoregressive TTS model, achieving state-of-the-art (SOTA) zero-shot TTS performance.

Jointly developed by Quwan Technology and The Chinese University of Hong Kong, Shenzhen.

Unlike traditional TTS models, this model employs a masked generative model with decoupled speech representation encoding, demonstrating superior performance in tasks such as voice cloning, cross-lingual synthesis, and speech control.

MaskGCT can mimic the voices of celebrities or characters from animated programs.

Zero-shot TTS system: A model that can generate natural speech without specific task-oriented training data; i.e., it can mimic any voice without training.

It does not require explicit alignment information between text and speech, nor phoneme-level duration prediction, adopting a masked and predictive learning approach. It supports controlling the total length of generated speech, adjusting prosody features such as speech rate and pauses, and can achieve emotional and tone adjustments, such as happy, sad, angry, calm, etc. It supports zero-shot speech synthesis, modifying generated speech, and supports voice conversion and cloning.

MaskGCT can learn the prosody, style, and emotion of instant speech.

Voice Conversion

Voice conversion involves transforming one person's voice into another's while maintaining the content unchanged. This technology is commonly used for voice cloning, audio editing, and personalized voice assistants.

Speech Editing

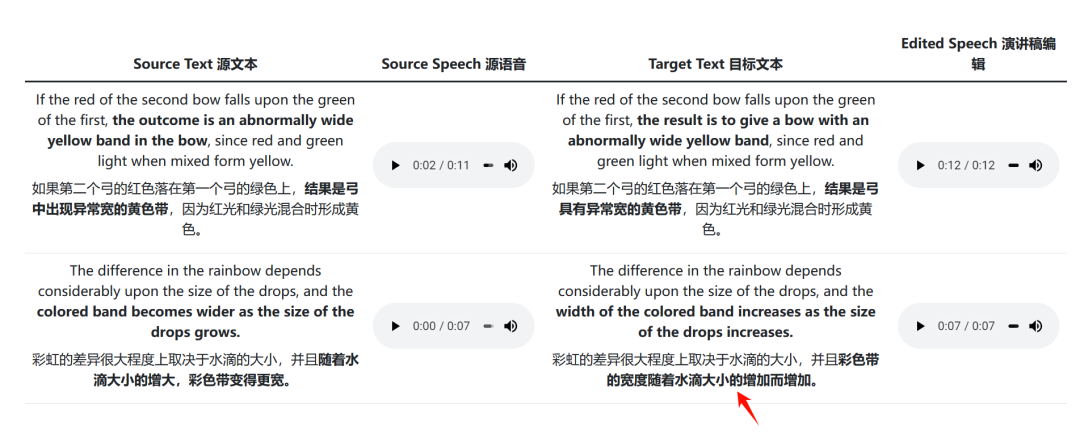

Based on the masked and predictive mechanism, the text-to-semantic model supports zero-shot speech content editing with the help of a text-speech aligner. By using the aligner, it can identify the editing boundaries of the original semantic marker sequence, mask the parts to be edited, and then use the edited text and unmasked semantic markers to predict the masked semantic markers.

"And as the size of the water droplets increases, the colored bands become wider." to "And the width of the colored bands increases as the size of the water droplets increases."

Prosody Controllability

Prosody controllability refers to the ability to adjust the rhythm and duration of generated speech. This means users can control the speed, pauses, etc., of the speech to achieve a more natural effect.

Finally, it also provides a demonstration of cross-lingual video translation functionality.

System Overview

Outperforming existing SOTA models (such as CosyVoice and XTTS-v2)

From this table, it can be seen that the MaskGCT model performs excellently in multiple metrics, especially in the following areas:

-

SIM-O (Similarity): MaskGCT's similarity score is very close to Ground Truth, particularly on the SeedTTS test sets (SeedTTS test-en and test-zh), where the SIM-O values reached 0.774 and 0.777, close to Ground Truth, outperforming other models.

-

WER (Word Error Rate): MaskGCT's WER is lower, indicating higher accuracy in speech generation. Its WER values are significantly lower than those of some competing models, particularly on the SeedTTS test sets.

-

FSD (Spectral Distance): MaskGCT's FSD values are lower than most other models, indicating that it is closer to the spectral characteristics of real speech when generating speech, with sound quality closer to real human voices.

-

SMOS and CMOS (Subjective Speech and Sound Quality Scores): MaskGCT scores close to or above other models on SMOS and CMOS, especially in "gt length" (using real speech duration as a reference), showing higher naturalness and sound quality.

Overall, MaskGCT approaches or surpasses existing SOTA models (such as CosyVoice and XTTS-v2) on multiple test sets, indicating significant advantages in naturalness, accuracy, and sound quality of speech generation.

More examples can be found here: https://maskgct.github.io/

Experience address:

https://voice.funnycp.com/audioTrans

https://huggingface.co/spaces/amphion/maskgct

Paper: https://arxiv.org/html/2409.00750v3